This post is also available in other languages:

The upcoming version 1.11 of the Go programming language will bring experimental support for modules, a new dependency management system for Go. A few days ago, I wrote a quick post about it. Since that post went live, things changed a bit and as we’re now very close to the new release, I thought it would be a good time for another post with a more hands-on approach. So here’s what we’ll do: we’ll create a new package and then we’ll make a few releases to see how that would work.

Creating a Module

So first things first. Let’s create our package. We’ll call it “testmod”. An important detail here: this directory should be outside your $GOPATH because by default, the modules support is disabled inside it. Go modules is a first step in potentially eliminating $GOPATH entirely at some point.

$ mkdir testmod

$ cd testmod

Our package is very simple:

package testmod

import "fmt"

// Hi returns a friendly greeting

func Hi(name string) string {

return fmt.Sprintf("Hi, %s", name)

}

The package is done but it is still not a module. Let’s change that.

$ go mod init github.com/robteix/testmod

go: creating new go.mod: module github.com/robteix/testmod

This creates a new file named go.mod in the package directory with the following contents:

module github.com/robteix/testmod

Not a lot here, but this effectively turns our package into a module. We can now push this code to a repository:

$ git init

$ git add *

$ git commit -am "First commit"

$ git push -u origin master

Until now, anyone willing to use this package would go get it:

$ go get github.com/robteix/testmod

And this would fetch the latest code in master. This still works, but we should probably stop doing that now that we have a Better Way™. Fetching master is inherently dangerous as we can never know for sure that the package authors didn’t make change that will break our usage. That’s what modules aims at fixing.

Quick Intro to Module Versioning

Go modules are versioned, and there are some particularities with regards to certain versions. You will need to familiarize yourself with the concepts behind semantic versioning.

More importantly, Go will use repository tags when looking for versions, and some versions are different of others: e.g. versions 2 and greater should have a different import path than versions 0 and 1 (we’ll get to that.) As well, by default Go will fetch the latest tagged version available in a repository. This is an important gotcha as you may be used to working with the master branch. What you need to keep in mind for now is that to make a release of our package, we need to tag our repository with the version. So let’s do that.

Making our first release

Now that our package is ready, we can release it to the world. We do this by using version tags. Let’s release our version 1.0.0:

$ git tag v1.0.0

$ git push --tags

This creates a tag on my Github repository marking the current commit as being the release 1.0.0.

Go doesn’t enforce that in any way, but a good idea is to also create a new branch (“v1”) so that we can push bug fixes to.

$ git checkout -b v1

$ git push -u origin v1

Now we can work on master without having to worry about breaking our release.

Using our module

Now we’re ready to use the module. We’ll create a simple program that will use our new package:

package main

import (

"fmt"

"github.com/robteix/testmod"

)

func main() {

fmt.Println(testmod.Hi("roberto"))

}

Until now, you would do a go get github.com/robteix/testmod to download the package, but with modules, this gets more interesting. First we need to enable modules in our new program.

$ go mod init mod

As you’d expect from what we’ve seen above, this will have created a new go.mod file with the module name in it:

module mod

Things get much more interesting when we try to build our new program:

$ go build

go: finding github.com/robteix/testmod v1.0.0

go: downloading github.com/robteix/testmod v1.0.0

As we can see, the go command automatically goes and fetches the packages imported by the program. If we check our go.mod file, we see that things have changed:

module mod

require github.com/robteix/testmod v1.0.0

And we now have a new file too, named go.sum, which contains hashes of the packages, to ensure that we have the correct version and files.

github.com/robteix/testmod v1.0.0 h1:9EdH0EArQ/rkpss9Tj8gUnwx3w5p0jkzJrd5tRAhxnA=

github.com/robteix/testmod v1.0.0/go.mod h1:UVhi5McON9ZLc5kl5iN2bTXlL6ylcxE9VInV71RrlO8=

Making a bugfix release

Now let’s say we realized a problem with our package: the greeting is missing ponctuation! People are mad because our friendly greeting is not friendly enough. So we’ll fix it and release a new version:

// Hi returns a friendly greeting

func Hi(name string) string {

- return fmt.Sprintf("Hi, %s", name)

+ return fmt.Sprintf("Hi, %s!", name)

}

We made this change in the v1 branch because it’s not relevant for what we’ll do for v2 later, but in real life, maybe you’d do it in master and then back-port it. Either way, we need to have the fix in our v1 branch and mark it as a new release.

$ git commit -m "Emphasize our friendliness" testmod.go

$ git tag v1.0.1

$ git push --tags origin v1

Updating modules

By default, Go will not update modules without being asked. This is a Good Thing™ as we want predictability in our builds. If Go modules were automatically updated every time a new version came out, we’d be back in the uncivilized age pre-Go1.11. No, we need to tell Go to update a modules for us.

We do this by using our good old friend go get:

- run

go get -u to use the latest minor or patch releases (i.e. it would update from 1.0.0 to, say, 1.0.1 or, if available, 1.1.0) - run

go get -u=patch to use the latest patch releases (i.e., would update to 1.0.1 but not to 1.1.0) - run

go get package@version to update to a specific version (say, github.com/robteix/testmod@v1.0.1)

In the list above, there doesn’t seem to be a way to update to the latest major version. There’s a good reason for that, as we’ll see in a bit.

Since our program was using version 1.0.0 of our package and we just created version 1.0.1, any of the following commands will update us to 1.0.1:

$ go get -u

$ go get -u=patch

$ go get github.com/robteix/testmod@v1.0.1

After running, say, go get -u our go.mod is changed to:

module mod

require github.com/robteix/testmod v1.0.1

Major versions

According to semantic version semantics, a major version is different than minors. Major versions can break backwards compatibility. From the point of view of Go modules, a major version is a different package completely. This may sound bizarre at first, but it makes sense: two versions of a library that are not compatible with each other are two different libraries.

Let’s make a major change in our package, shall we? Over time, we realized our API was too simple, too limited for the use cases of our users, so we need to change the Hi() function to take a new parameter for the greeting language:

package testmod

import (

"errors"

"fmt"

)

// Hi returns a friendly greeting in language lang

func Hi(name, lang string) (string, error) {

switch lang {

case "en":

return fmt.Sprintf("Hi, %s!", name), nil

case "pt":

return fmt.Sprintf("Oi, %s!", name), nil

case "es":

return fmt.Sprintf("¡Hola, %s!", name), nil

case "fr":

return fmt.Sprintf("Bonjour, %s!", name), nil

default:

return "", errors.New("unknown language")

}

}

Existing software using our API will break because they (a) don’t pass a language parameter and (b) don’t expect an error return. Our new API is no longer compatible with version 1.x so it’s time to bump the version to 2.0.0.

I mentioned before that some versions have some peculiarities, and this is the case now. Versions 2 and over should change the import path. They are different libraries now.

We do this by appending a new version path to the end of our module name.

module github.com/robteix/testmod/v2

The rest is the same as before, we push it, tag it as v2.0.0 (and optionally create a v2 branch.)

$ git commit testmod.go -m "Change Hi to allow multilang"

$ git checkout -b v2 # optional but recommended

$ echo "module github.com/robteix/testmod/v2" > go.mod

$ git commit go.mod -m "Bump version to v2"

$ git tag v2.0.0

$ git push --tags origin v2 # or master if we don't have a branch

Updating to a major version

Even though we have released a new incompatible version of our library, existing software will not break, because it will continue to use the existing version 1.0.1. go get -u will not get version 2.0.0.

At some point, however, I, as the library user, may want to upgrade to version 2.0.0 because maybe I was one of those users who needed multi-language support.

I do it but modifying my program accordingly:

package main

import (

"fmt"

"github.com/robteix/testmod/v2"

)

func main() {

g, err := testmod.Hi("Roberto", "pt")

if err != nil {

panic(err)

}

fmt.Println(g)

}

And then when I run go build, it will go and fetch version 2.0.0 for me. Notice how even though the import path ends with “v2”, Go will still refer to the module by its proper name (“testmod”).

As I mentioned before, the major version is for all intents and purposes a completely different package. Go modules does not link the two at all. That means we can use two incompatible versions in the same binary:

package main

import (

"fmt"

"github.com/robteix/testmod"

testmodML "github.com/robteix/testmod/v2"

)

func main() {

fmt.Println(testmod.Hi("Roberto"))

g, err := testmodML.Hi("Roberto", "pt")

if err != nil {

panic(err)

}

fmt.Println(g)

}

This eliminates a common problem with dependency management: when dependencies depend on different versions of the same library.

Tidying it up

Going back to the previous version that uses only testmod 2.0.0, if we check the contents of go.mod now, we’ll notice something:

module mod

require github.com/robteix/testmod v1.0.1

require github.com/robteix/testmod/v2 v2.0.0

By default, Go does not remove a dependency from go.mod unless you ask it to. If you have dependencies that you no longer use and want to clean up, you can use the new tidy command:

$ go mod tidy

Now we’re left with only the dependencies that are really being used.

Vendoring

Go modules ignores the vendor/ directory by default. The idea is to eventually do away with vendoring[^0]. But if we still want to add vendored dependencies to our version control, we can still do it:

$ go mod vendor

This will create a vendor/ directory under the root of your project containing the source code for all of your dependencies.

Still, go build will ignore the contents of this directory by default. If you want to build dependencies from the vendor/ directory, you’ll need to ask for it.

$ go build -mod vendor

I expect many developers willing to use vendoring will run go build normally on their development machines and use -mod vendor in their CI.

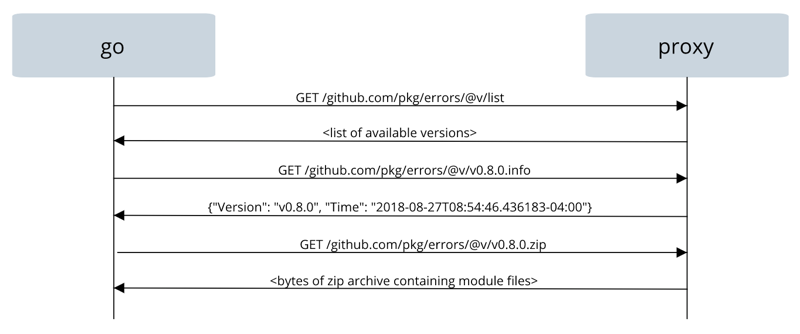

Again, Go modules is moving away from the idea of vendoring and towards using a Go module proxy for those who don’t want to depend on the upstream version control services directly.

There are ways to guarantee that go will not reach the network at all (e.g. GOPROXY=off) but these are the subject for a future blog post.

Conclusion

This post may seem a bit daunting, but I tried to explain a lot of things together. The reality is that now Go modules is basically transparent. We import package like always in our code and the go command will take care of the rest.

When we build something, the dependencies will be fetched automatically. It also eliminates the need to use $GOPATH which was a roadblock for new Go developers who had trouble understanding why things had to go into a specific directory.

Vendoring is (unofficially) being deprecated in favour of using proxies.[^0] I may do a separate post about the Go module proxy. (Update: it’s live.) [^0]: I think this came out a bit too strong and people left with the impression that vendoring is being removed right now. It isn’t. Vendoring still works, albeit slightly different than before. There seems to be a desire to replace vendoring with something better, which may or may not be a proxy. But for now this is just it: a desire for a better solution. Vendoring is not going away until a good replacement is found (if ever.)